In this article, we're going to introduce you to the fascinating world of web scraping and automation. You'll learn how these technologies can transform the way you access and analyze online data, the challenges and solutions related to navigating website protections, and how TakionAPI can be your ally in efficiently overcoming these barriers. Whether you're new to the concept or looking to deepen your understanding, this guide is your starting point into mastering the art of web data extraction.

Web Scraping and Automation Techniques

Web scraping and automation can be done in two main ways: directly via HTTP requests or through automated browsers. Direct HTTP requests are advantageous because the scripts are lightweight in memory usage, run faster, and can handle many more tasks concurrently. The challenge lies in emulating a human visitor: the script has to obtain the same cookies and send the same headers that a real browser would as it moves through the site.
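As a minimal sketch of the direct-HTTP approach, the snippet below uses only Python's standard library to build a client that keeps cookies between requests and sends a consistent set of headers. The header values and the `build_client` / `build_request` names are illustrative assumptions, not what any particular site expects.

```python
import http.cookiejar
import urllib.request

# Illustrative header set (assumed values); a real browser sends many more.
DEFAULT_HEADERS = {
    "User-Agent": "Mozilla/5.0 (X11; Linux x86_64) ExampleClient/1.0",
    "Accept": "text/html,application/xhtml+xml",
    "Accept-Language": "en-US,en;q=0.9",
}


def build_client():
    """Return an opener that stores cookies and replays them on later requests."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar


def build_request(url, extra_headers=None):
    """Create a GET request carrying the default headers plus any overrides."""
    headers = {**DEFAULT_HEADERS, **(extra_headers or {})}
    return urllib.request.Request(url, headers=headers, method="GET")
```

Calling `opener.open(build_request(url), timeout=10)` would send the request. Any cookies the server sets end up in `jar` and are sent back automatically on the next call through the same opener.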

Browser automation, on the other hand, simplifies development and offers a more straightforward way to emulate human actions on the web. It consumes significantly more memory, however. And although it is quicker to develop, it is becoming increasingly detectable by modern anti-bot systems, which makes it less effective against sophisticated website protections.

Deep Dive into Real-life Examples of Web Scraping and Automation

Let's delve deeper into how web scraping and automation are applied in real-life scenarios, offering tangible benefits and challenges.

Sneaker and Ticket Bots

The sneaker and ticket industries are prime examples of markets heavily targeted by automation bots. These bots are designed to purchase limited edition sneakers or tickets for concerts and sports events the moment they become available online. The high demand and limited supply of these items make them perfect targets for resellers looking to capitalize on secondary markets.

For sneakers, bots navigate through purchase processes faster than any human could, securing highly sought-after releases from brands like Nike or Adidas. Once acquired, these sneakers can be sold at significantly higher prices on platforms like eBay or StockX, generating substantial profits for the bot operators.

In the ticketing sector, bots operate similarly by swiftly purchasing tickets for high-demand events. This practice often leads to rapid sellouts, forcing fans to turn to resale platforms where tickets are offered at inflated prices. The use of such bots not only impacts consumer access to these products but also raises ethical and legal questions regarding fair market access.

E-commerce and Price Monitoring

In e-commerce, web scraping is used extensively for price monitoring and market analysis. Bots can track pricing errors or changes on platforms like Amazon, enabling businesses to adjust their pricing strategies in real-time to stay competitive. For instance, an automated system might monitor a competitor's product listings for price reductions, stock levels, and promotional activities, providing invaluable data for strategic decision-making.

Price error monitors exploit discrepancies in pricing, where automated systems alert users to significant price drops or mismatches, allowing quick purchase before corrections are made. This tactic is particularly prevalent during high-traffic sales events like Black Friday or Cyber Monday, where pricing errors are more likely due to the volume of changes being made.
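The core of a price monitor can be sketched as a comparison between two price snapshots. This is only an illustration: the `find_price_drops` name, the `{sku: price}` snapshot format, and the 30% default threshold are assumptions, and how the snapshots are collected is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PriceAlert:
    sku: str
    old_price: float
    new_price: float
    drop_ratio: float  # fraction of the old price that was cut, e.g. 0.5 = 50% off


def find_price_drops(previous, current, min_drop=0.30):
    """Compare two {sku: price} snapshots and flag drops of at least `min_drop`."""
    alerts = []
    for sku, new_price in current.items():
        old_price = previous.get(sku)
        if old_price is None or old_price <= 0:
            continue  # new or unpriced item: nothing to compare against
        drop_ratio = (old_price - new_price) / old_price
        if drop_ratio >= min_drop:
            alerts.append(PriceAlert(sku, old_price, new_price, drop_ratio))
    # Largest drops first, since those are the likeliest pricing errors.
    return sorted(alerts, key=lambda a: a.drop_ratio, reverse=True)
```

Small price changes fall below the threshold and are ignored, so only unusually large drops trigger an alert.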

Sneaker and Ticket Monitors

Beyond purchasing, monitoring bots play a crucial role in the sneakers and tickets markets by tracking inventory availability. These bots send real-time alerts when limited products become available or when tickets are released, giving users an edge in acquiring them. This capability is vital for enthusiasts and resellers alike, who rely on timely information to secure these coveted items.
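The alerting logic of such a monitor comes down to spotting items that have just become available. Here is a rough sketch, assuming each check produces a mapping from an item identifier to an "in stock" flag (the function name and data shape are hypothetical):

```python
def newly_available(previous, current):
    """Return items that were out of stock (or unseen) before and are in stock now.

    Both arguments map an item identifier to a boolean "in stock" flag.
    """
    return sorted(
        item
        for item, in_stock in current.items()
        if in_stock and not previous.get(item, False)
    )
```

Items that were already in stock on the previous check are not reported again, so each release triggers a single alert.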

Security Measures Against Unauthorized Scraping

Websites implement a range of security measures to protect against unauthorized scraping and automation. These measures are designed to differentiate between human users and bots, ensuring that only legitimate traffic accesses their resources.

CAPTCHAs

CAPTCHAs are one of the most common defenses, presenting challenges that are easy for humans but difficult for automated systems to solve. These can range from text recognition in distorted images to identifying objects in pictures or solving simple puzzles. CAPTCHAs serve as a first line of defense, deterring basic or poorly designed bots from accessing site content.

Rate Limiting and Traffic Analysis

Websites also employ rate limiting, where the number of requests from a single IP address is restricted over a given timeframe. Traffic analysis further enhances this by monitoring for patterns indicative of bot behavior, such as rapid page requests, unusual navigation paths, or header inconsistencies. These techniques aim to maintain website performance and prevent data scraping that could lead to bandwidth overuse or server overload.
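To make rate limiting concrete, here is a minimal sliding-window limiter as a server might apply it per IP address. It is one common design among several (fixed windows and token buckets are others), and the class name and parameters are illustrative assumptions.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client within the last `window_seconds`."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._hits = defaultdict(deque)  # client IP -> timestamps of recent requests

    def allow(self, client_ip):
        now = self.clock()
        hits = self._hits[client_ip]
        # Discard timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit; a server would typically answer HTTP 429
        hits.append(now)
        return True
```

With `SlidingWindowLimiter(2, 10)`, a third request from the same IP within ten seconds is refused, while requests from other addresses are unaffected.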

Advanced Anti-bot Measures

More sophisticated anti-bot measures involve deep analysis of user behavior and browser characteristics. Companies like Akamai, Cloudflare, and Datadome specialize in developing complex algorithms that monitor various indicators of bot activity.

Behavioral Analysis and Fingerprinting

These systems analyze mouse movements, keystroke dynamics, and browsing patterns to identify automated scripts. Fingerprinting techniques further scrutinize browser and device characteristics, looking for anomalies that suggest non-human interaction. These methods can detect even the most advanced bots, making unauthorized access increasingly challenging.
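Commercial fingerprinting is far more elaborate than anything that fits here, but the basic idea can be sketched in a few lines: reduce the collected attributes to a stable identifier, and look for combinations that contradict each other. The attribute names (`user_agent`, `platform`, `screen`) and the single Windows check below are simplifying assumptions, not how any specific vendor works.

```python
import hashlib
import json


def fingerprint(attributes):
    """Hash a dict of browser/device attributes into a stable identifier."""
    # Sorting keys makes the hash independent of the order attributes were collected in.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def platform_mismatch(attributes):
    """Flag one simple anomaly: the User-Agent and platform disagree about Windows."""
    user_agent = attributes.get("user_agent", "").lower()
    platform = attributes.get("platform", "").lower()
    claims_windows = "windows" in user_agent
    reports_windows = platform.startswith("win")
    return claims_windows != reports_windows
```

A client whose User-Agent claims Windows while its platform field reports Linux would be flagged by `platform_mismatch`, which is the kind of inconsistency a spoofed header set tends to produce.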

Token and Cookie Validation

Token and cookie validation are crucial components of modern anti-bot strategies. Websites generate unique tokens or set specific cookies during user sessions, which must be presented correctly in subsequent requests. Analyzing how these elements are handled allows websites to identify and block bots that fail to mimic authentic user behavior accurately.
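One simple way a server can validate a token is to sign it with a secret key and verify the signature on every later request. The sketch below uses an HMAC for this; the function names and the `session_id.signature` token format are assumptions for illustration, and production systems usually add expiry times and further checks.

```python
import hashlib
import hmac
import secrets

SECRET_KEY = secrets.token_bytes(32)  # server-side secret; never sent to the client


def issue_token(session_id, key=SECRET_KEY):
    """Return 'session_id.signature' to hand to the client (e.g. in a cookie)."""
    signature = hmac.new(key, session_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{session_id}.{signature}"


def verify_token(token, key=SECRET_KEY):
    """Check that the token was issued by this server and has not been altered."""
    session_id, sep, signature = token.rpartition(".")
    if not sep or not session_id:
        return False  # malformed token: no signature part
    expected = hmac.new(key, session_id.encode("utf-8"), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(signature.encode("utf-8"), expected.encode("utf-8"))
```

A client that drops the token, alters it, or reuses a signature with a different session ID fails `verify_token`, which is how incorrect handling gives a bot away.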

From Theory to Practice: Datadome

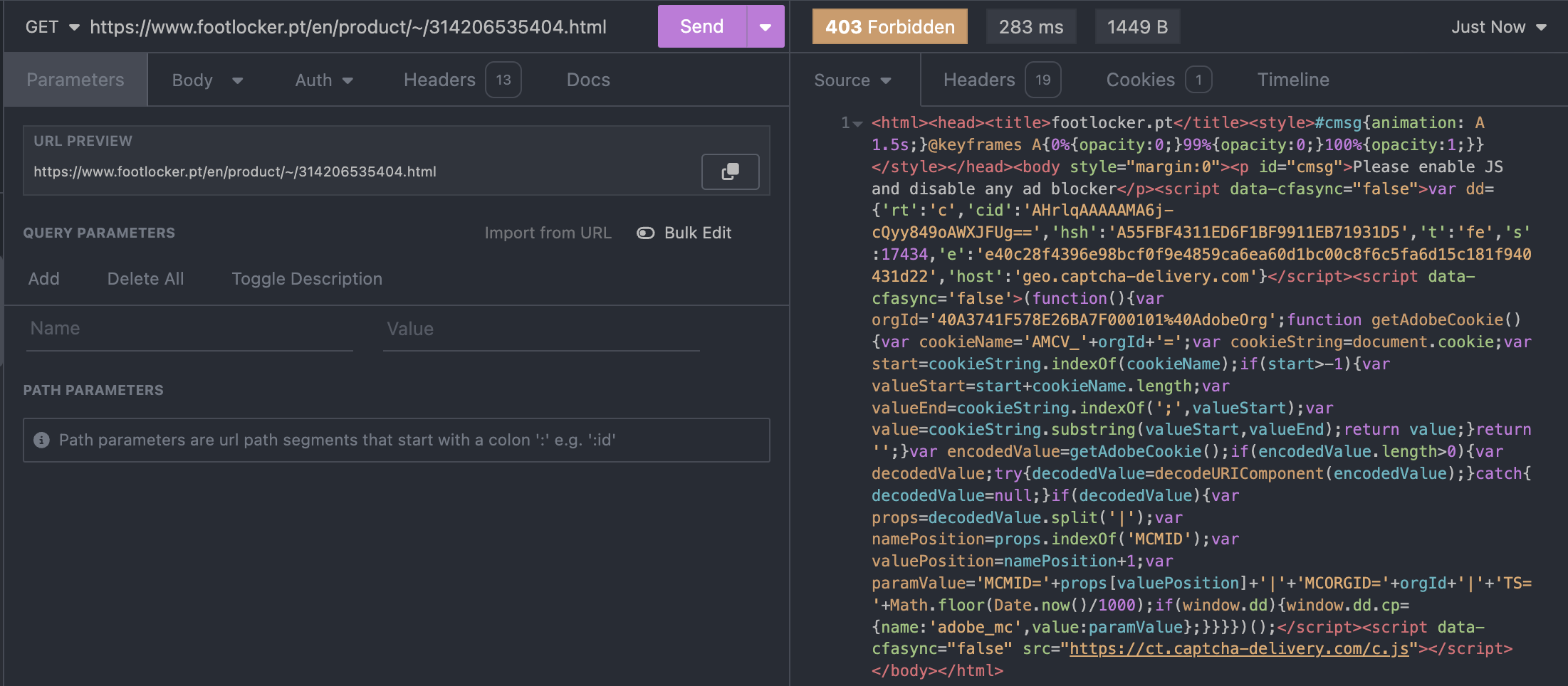

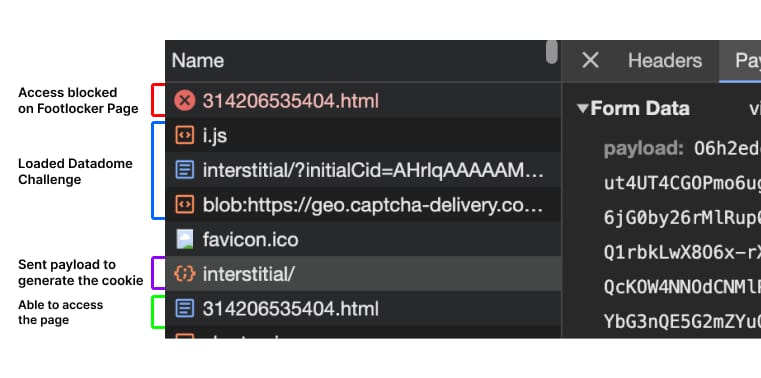

Let's delve into a tangible example to elucidate the complexities of web security measures. Imagine attempting to access a specific product page on Footlocker, like "https://www.footlocker.pt/en/product/~/314206535404.html", via direct HTTP requests. Intuitively, one might expect to directly receive the HTML content of the page. However, the reality deviates from expectations as you're met with a Datadome challenge instead.

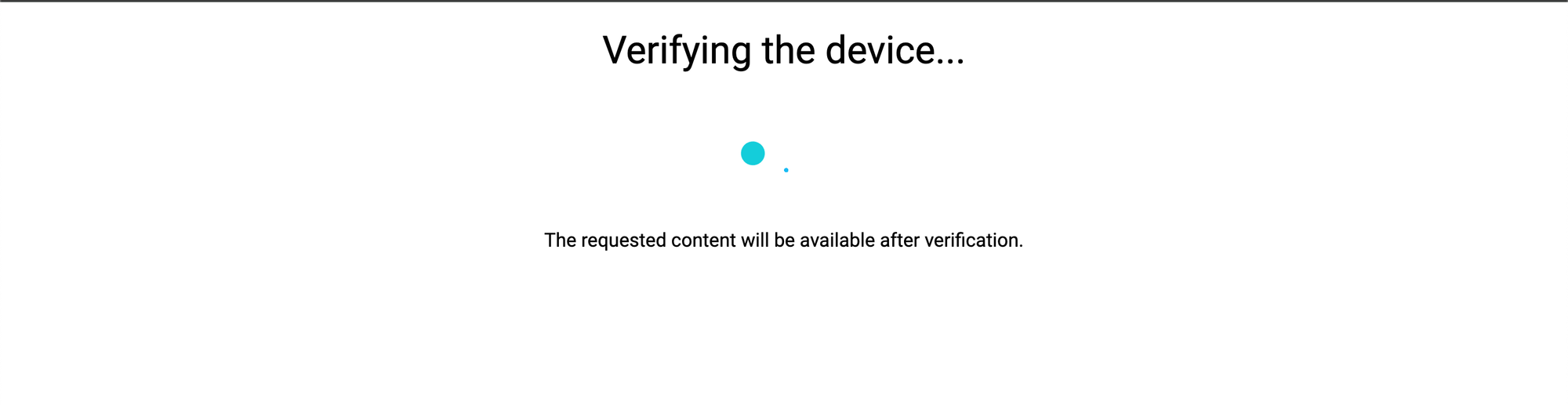

This Datadome challenge acts as an initial safeguard, functioning similarly to a pseudo Web Application Firewall (WAF), whose primary goal is to deter automated bot access. Successful navigation through this challenge is rewarded with a 'datadome' cookie, a key that unlocks access to the sought-after product page.
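From the client's point of view, the practical question is whether a response has set that cookie. As a small sketch using Python's standard library, the helper below looks for a cookie by name in a list of `Set-Cookie` header values. The helper name and the sample header in the usage note are made up for illustration; only the cookie name `datadome` comes from the example above.

```python
from http.cookies import SimpleCookie


def extract_cookie(set_cookie_headers, name="datadome"):
    """Return the value of cookie `name` from a list of Set-Cookie header values, or None."""
    for header in set_cookie_headers:
        cookie = SimpleCookie()
        cookie.load(header)  # parses one "name=value; attr=..." header line
        if name in cookie:
            return cookie[name].value
    return None
```

For instance, `extract_cookie(["datadome=EXAMPLE_VALUE; Path=/; Secure"])` returns `"EXAMPLE_VALUE"`, while a response without the cookie yields `None`.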

For those exploring via HTTP requests, the task becomes to recreate the encrypted payload and generate a valid cookie upon facing this challenge. Meanwhile, automated browser users must strive to avoid being flagged as a "bad" device.

Circumventing Web Security Measures

Despite robust security measures, techniques have been developed to circumvent these protections, highlighting a continuous cat-and-mouse game between bot operators and website administrators.

Reverse Engineering JavaScript

Developers of advanced bots reverse engineer the JavaScript code used by anti-bot mechanisms. By understanding how tokens are generated and how server-side checks validate them, they can adapt their bots to mimic human-like interactions more closely and avoid detection.

CAPTCHA Solving Services

CAPTCHA solving services like 2Captcha or AntiCaptcha provide a human-powered solution to pass CAPTCHA challenges. While effective, this approach is not without its drawbacks, including potential delays and costs, especially when scaling up operations.

Utilizing Solving APIs

The evolution of anti-bot technologies has spurred the development of specialized APIs designed to navigate around these security measures. Services like TakionAPI offer sophisticated solutions that handle token generation, CAPTCHA solving, and emulate genuine user behavior, enabling seamless data access even in the presence of advanced anti-bot protections.

These methods represent the forefront of the ongoing battle between web security and access, illustrating the lengths to which both sides will go to protect their interests or access data. As technologies on both fronts continue to evolve, the strategies employed to scrape data or protect it will undoubtedly become even more sophisticated.

Introducing TakionAPI

This is where TakionAPI comes in. We specialize in providing advanced solutions for overcoming web security measures, including a wide array of CAPTCHAs and anti-bot protections. Over the years, our dedication to the study of web security and continuous service enhancement has enabled us to develop sophisticated AI models. These models keep our services fast, reliable, and always up to date.

Benefits of Using TakionAPI:

- Efficiency and Speed: TakionAPI reduces the time and resources required to collect data from protected websites, enabling faster decision-making and streamlined operations.

- Scalability: Designed to handle requests at scale, our APIs support the growth of your data collection efforts without compromising performance.

- Reliability: With TakionAPI, users can rely on continuous access to data, minimizing the risk of being blocked or facing downtime due to anti-bot measures.

- Cost-Effectiveness: Eliminate the need for expensive and time-consuming manual CAPTCHA solving or the development of in-house solutions.

Example Usage:

Consider a competitive analysis tool that requires data from various e-commerce platforms protected by rigorous anti-bot measures. By integrating TakionAPI, your application gains unhindered access to essential data, such as pricing, availability, and market trends. This not only ensures your tool operates without disruptions but also keeps your business ahead with the latest market insights.

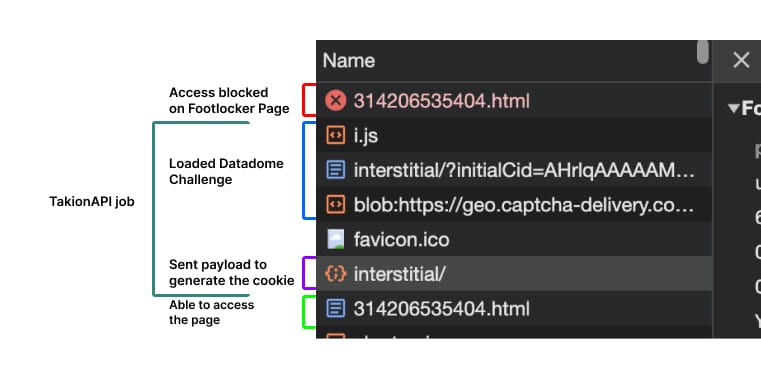

Returning to the previous example, the Datadome Interstitial challenge becomes a non-issue with TakionAPI. Our APIs handle the intricacies of challenge solving and cookie generation seamlessly. All you need to do is add an API call to our servers or use one of our client libraries for Python, NodeJS, or Golang, streamlining your data collection process.

Get Started with a Free Trial:

We understand the diverse challenges our clients face, which is why we offer a free trial to showcase the power of TakionAPI. Join our community on Discord to begin your trial, access expert support, and connect with peers navigating the web scraping and automation landscape.

Experience the transformative capabilities of TakionAPI and revolutionize your approach to accessing web data. Dive into our documentation to learn more and join our Discord community to kickstart your journey with TakionAPI.
